Cranmer, K., Brehmer, J., & Louppe, G. (2020). The frontier of simulation-based inference. Proceedings of the National Academy of Sciences, 117(48), 30055–30062.

Lavin, A., Krakauer, D., Zenil, H., Gottschlich, J., Mattson, T., Brehmer, J., Anandkumar, A., Choudry, S., Rocki, K., Baydin, A. G., et al. (2021). Simulation intelligence: Towards a new generation of scientific methods. arXiv Preprint arXiv:2112.03235.

Lee, M. D., & Wagenmakers, E.-J. (2013). Bayesian Cognitive Modeling: A Practical Course. Cambridge University Press.

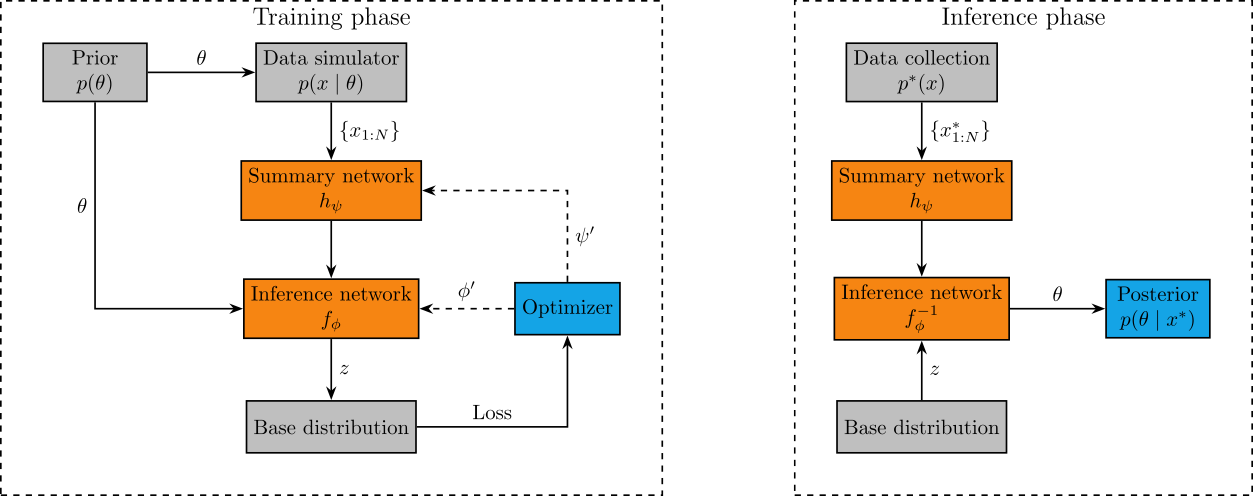

Radev, S. T., Mertens, U. K., Voss, A., Ardizzone, L., & Köthe, U. (2020). BayesFlow: Learning complex stochastic models with invertible neural networks. IEEE Transactions on Neural Networks and Learning Systems, 33(4), 1452–1466.

Radev, S. T., Schmitt, M., Pratz, V., Picchini, U., Köthe, U., & Bürkner, P.-C. (2023). JANA: Jointly amortized neural approximation of complex Bayesian models. The 39th Conference on Uncertainty in Artificial Intelligence. https://openreview.net/forum?id=dS3wVICQrU0

Radev, S. T., Schmitt, M., Schumacher, L., Elsemüller, L., Pratz, V., Schälte, Y., Köthe, U., & Bürkner, P.-C. (2023). BayesFlow: Amortized Bayesian workflows with neural networks. arXiv Preprint arXiv:2306.16015. https://arxiv.org/abs/2306.16015