Code

import os

if "KERAS_BACKEND" not in os.environ:
    # set this to "torch", "tensorflow", or "jax"
    os.environ["KERAS_BACKEND"] = "jax"

import matplotlib.pyplot as plt
import numpy as np
import bayesflow as bf

INFO:bayesflow:Using backend 'jax'

Here we will revisit the basic binomial model:

\[
\begin{aligned}
\theta & \sim \text{Beta}(1, 1) \\
k & \sim \text{Binomial}(\theta, n).
\end{aligned}
\]

We use it to generate four distributions: the prior, the prior predictive, the posterior, and the posterior predictive.

Prior and prior predictive distributions

The prior and prior predictive distributions are generated jointly by the simulator.

Code

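def prior():
    # draw the rate parameter from a Beta(1, 1) prior
    return dict(theta=np.random.beta(a=1, b=1))

def likelihood(theta):
    # draw the number of successes out of n = 15 trials
    return dict(k=np.random.binomial(n=15, p=theta))

simulator = bf.make_simulator([prior, likelihood])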

Code

samples = simulator.sample(5000)
prior_samples = samples["theta"]      # draws from the prior
prior_predictives = samples["k"]      # draws from the prior predictive
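As a sanity check on the simulated prior predictive draws, the closed-form result can be computed directly. Under a Beta(1, 1) prior, the prior predictive for \(k\) is beta-binomial, which reduces to a uniform distribution over \(0, \dots, n\). The sketch below uses only the Python standard library; the helper names `log_beta` and `beta_binomial_pmf` are ours and are not part of BayesFlow.

```python
from math import comb, exp, lgamma

def log_beta(a, b):
    # log of the Beta function B(a, b)
    return lgamma(a) + lgamma(b) - lgamma(a + b)

def beta_binomial_pmf(k, n, a, b):
    # P(k) when theta ~ Beta(a, b) and k | theta ~ Binomial(n, theta)
    return comb(n, k) * exp(log_beta(k + a, n - k + b) - log_beta(a, b))

n = 15
prior_predictive_pmf = [beta_binomial_pmf(k, n, 1, 1) for k in range(n + 1)]
```

Each entry of `prior_predictive_pmf` equals \(1/(n+1)\), so a histogram of the simulated `k` values should look approximately flat.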

Posterior distribution

To obtain the posterior distribution, we need to define a posterior approximator and train it.

Code

adapter = (
    bf.Adapter()
    .constrain("theta", lower=0, upper=1)
    .rename("theta", "inference_variables")
    .rename("k", "inference_conditions")
)
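The bounds on `theta` keep posterior draws inside (0, 1). A common way to enforce such bounds, and the one we assume here purely for illustration, is to let the network work on an unconstrained scale through a logit transform and to map draws back with a sigmoid. The helper names below are hypothetical and do not come from BayesFlow; its internal implementation may differ.

```python
import numpy as np

def to_unconstrained(theta, lower=0.0, upper=1.0):
    # rescale to (0, 1), then apply the logit
    scaled = (theta - lower) / (upper - lower)
    return np.log(scaled) - np.log1p(-scaled)

def to_constrained(z, lower=0.0, upper=1.0):
    # sigmoid, then rescale back to (lower, upper)
    return lower + (upper - lower) / (1.0 + np.exp(-z))

theta = np.array([0.05, 0.5, 0.95])
z = to_unconstrained(theta)      # values on the real line
theta_back = to_constrained(z)   # round trip recovers theta
```

Whatever value the network produces on the unconstrained scale, the inverse map returns a valid rate.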

Code

workflow = bf.BasicWorkflow(
    simulator=simulator,
    adapter=adapter,
    inference_network=bf.networks.CouplingFlow(),
)

Code

history = workflow.fit_online(epochs=20, batch_size=512)

Once the approximator is trained, we can apply it to obtain the posterior for a specific observation, e.g., \(k=1\).

Code

inference_data = dict(k=np.array([[1]]))
posterior = workflow.sample(num_samples=5000, conditions=inference_data)
# keep the draws for the single dataset as a flat array
posterior = posterior["theta"][0, :, 0]

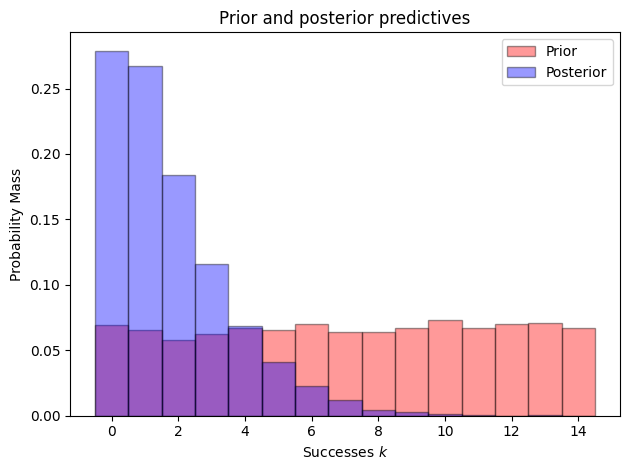
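Because the Beta prior is conjugate to the binomial likelihood, the exact posterior is available: \(\theta \mid k \sim \text{Beta}(1 + k, 1 + n - k)\), which for \(k = 1\) and \(n = 15\) is Beta(2, 15). This gives a reference against which the approximate draws can be compared. The following is a minimal sketch that assumes only NumPy; the variable names are illustrative and independent of the workflow.

```python
import numpy as np

rng = np.random.default_rng(0)

n, k = 15, 1
a_post, b_post = 1 + k, 1 + n - k   # Beta(2, 15)

# draws from the exact posterior, for comparison with the network's samples
exact_draws = rng.beta(a_post, b_post, size=5000)

# closed-form posterior mean and variance of a Beta(a, b) distribution
exact_mean = a_post / (a_post + b_post)
exact_var = a_post * b_post / ((a_post + b_post) ** 2 * (a_post + b_post + 1))
```

Comparing the mean or a histogram of `exact_draws` with the approximate posterior samples gives a quick indication of whether training was sufficient.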

Posterior predictive distribution

The posterior predictive distribution can be generated from the simulator by passing it draws from the posterior distribution of the parameters.

Code

posterior_predictives = simulator.sample(5000, theta=posterior)["k"]

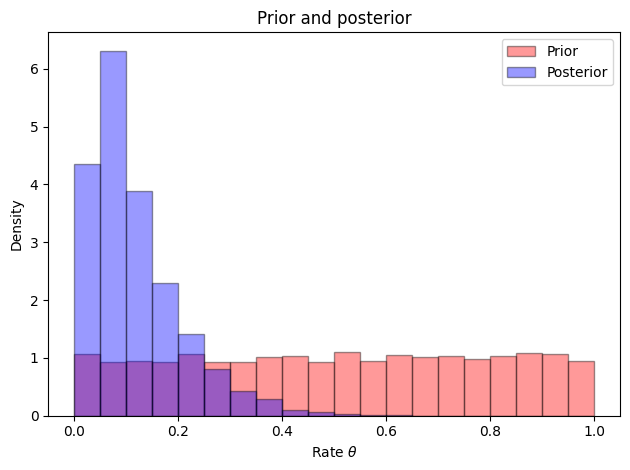
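Conceptually, the posterior predictive is obtained in two steps: draw a value of \(\theta\) from the posterior, then draw \(k\) from the binomial likelihood given that value. The sketch below spells this out in plain NumPy. It uses draws from Beta(2, 15), the exact posterior for \(k = 1\) and \(n = 15\) under the Beta(1, 1) prior, as a stand-in for the network's posterior samples; this is an assumption made so that the example runs without a trained workflow.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 15

# step 1: posterior draws of the rate (stand-in for the approximate posterior)
theta_draws = rng.beta(2, 15, size=5000)

# step 2: one replicated dataset per posterior draw
k_rep = rng.binomial(n=n, p=theta_draws)

# Monte Carlo estimate of the posterior predictive probability mass function
pmf_estimate = np.bincount(k_rep, minlength=n + 1) / k_rep.size
```

Because each replicated `k` uses a different `theta`, the resulting distribution is wider than a binomial with `theta` fixed at the posterior mean.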

We can now plot the distributions: first the prior and posterior distributions of the parameter, then the prior and posterior predictive distributions.

Code

bins = np.arange(0, 1.05, 0.05)
plt.hist(prior_samples, label="Prior", alpha=0.4, density=True, color="r", edgecolor="black", bins=bins)
plt.hist(posterior, label="Posterior", alpha=0.4, density=True, color="b", edgecolor="black", bins=bins)
plt.legend()  # show the "Prior" and "Posterior" labels
plt.title("Prior and posterior")
plt.xlabel("Rate $\\theta$")
plt.ylabel("Density")

Code

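# half-integer bin edges so that each k in 0..15 gets its own bin
bins = np.arange(-0.5, 16.5)
plt.hist(prior_predictives, label="Prior", alpha=0.4, density=True, color="r", edgecolor="black", bins=bins)
plt.hist(posterior_predictives, label="Posterior", alpha=0.4, density=True, color="b", edgecolor="black", bins=bins)
plt.legend()  # show the "Prior" and "Posterior" labels
plt.title("Prior and posterior predictives")
plt.xlabel("Successes $k$")
plt.ylabel("Probability Mass")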

References

Lee, M. D., & Wagenmakers, E.-J. (2013). Bayesian Cognitive Modeling: A Practical Course. Cambridge University Press.