Code

import os

if "KERAS_BACKEND" not in os.environ:
    # set this to "torch", "tensorflow", or "jax"
    os.environ["KERAS_BACKEND"] = "jax"

import matplotlib.pyplot as plt
import numpy as np
import bayesflow as bf

INFO:bayesflow:Using backend 'jax'

The first problem in this chapter involves inferring a binomial rate:

\[\begin{equation}
\begin{aligned}
\theta & \sim \text{Beta}(1, 1) \\
k & \sim \text{Binomial}(\theta, n).
\end{aligned}
\end{equation}\]
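Because the Beta prior is conjugate to the binomial likelihood, this model also has a closed-form posterior, which gives a convenient reference for checking the amortized approximation. Multiplying the likelihood by the \(\text{Beta}(1, 1)\) prior density (a constant) and dropping terms that do not depend on \(\theta\):

\[\begin{equation}
\begin{aligned}
p(\theta \mid k, n) & \propto \theta^{k} (1 - \theta)^{n - k} \\
\theta \mid k, n & \sim \text{Beta}(k + 1, n - k + 1).
\end{aligned}
\end{equation}\]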

Simulator

We will amortize over different sample sizes, so we also draw \(n\) randomly during simulation.

Code

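def context():
    # sample size, drawn uniformly from 1 to 100 (upper bound is exclusive)
    return dict(n=np.random.randint(1, 101))

def prior():
    # Beta(1, 1) prior on the rate
    return dict(theta=np.random.beta(a=1, b=1))

def likelihood(n, theta):
    # number of successes in n trials
    return dict(k=np.random.binomial(n=n, p=theta))

simulator = bf.make_simulator([context, prior, likelihood])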
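To make the order of the draws explicit, here is a minimal sketch of a single pass through the same generative process using only Python's standard library. The helper name `simulate_once` is hypothetical and not part of BayesFlow; it only mirrors the three steps (context, prior, likelihood) for illustration.

```python
import random

def simulate_once(rng):
    """Draw one (n, theta, k) triple from the generative model."""
    # randint is inclusive on both ends, matching np.random.randint(1, 101)
    n = rng.randint(1, 100)
    # Beta(1, 1) is the uniform distribution on [0, 1]
    theta = rng.betavariate(1, 1)
    # Binomial(n, theta) as the number of successes in n Bernoulli trials
    k = sum(rng.random() < theta for _ in range(n))
    return {"n": n, "theta": theta, "k": k}

rng = random.Random(0)  # fixed seed so the run is reproducible
draws = [simulate_once(rng) for _ in range(5)]
for d in draws:
    print(d)
```

Each draw has its own \(n\), which is what lets a single trained network handle any sample size in the simulated range.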

Approximator

Code

adapter = (
    bf.Adapter()
    .constrain("theta", lower=0, upper=1)
    .rename("theta", "inference_variables")
    .concatenate(["k", "n"], into="inference_conditions")
)
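Constraining \(\theta\) to \((0, 1)\) matters because the inference network works on unbounded values. One common way to handle such a bound is to train on a logit-transformed parameter and map samples back afterwards. The sketch below illustrates only that idea; the logit choice and the function names `to_unconstrained` and `to_constrained` are assumptions for illustration, not taken from the library's source.

```python
import math

def to_unconstrained(theta, lower=0.0, upper=1.0):
    """Map theta in (lower, upper) to the real line with a scaled logit."""
    u = (theta - lower) / (upper - lower)
    return math.log(u / (1.0 - u))

def to_constrained(z, lower=0.0, upper=1.0):
    """Inverse map: squash a real number back into (lower, upper)."""
    return lower + (upper - lower) / (1.0 + math.exp(-z))

# Round trip: the value mapped back should agree with the starting value
for theta in (0.05, 0.5, 0.95):
    z = to_unconstrained(theta)
    print(f"theta={theta:.2f} -> z={z:+.3f} -> theta={to_constrained(z):.2f}")
```

Whatever the network outputs, the inverse map guarantees that posterior samples of \(\theta\) stay inside the admissible range.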

Code

workflow = bf.BasicWorkflow(
    simulator=simulator,
    adapter=adapter,
    inference_network=bf.networks.CouplingFlow()
)

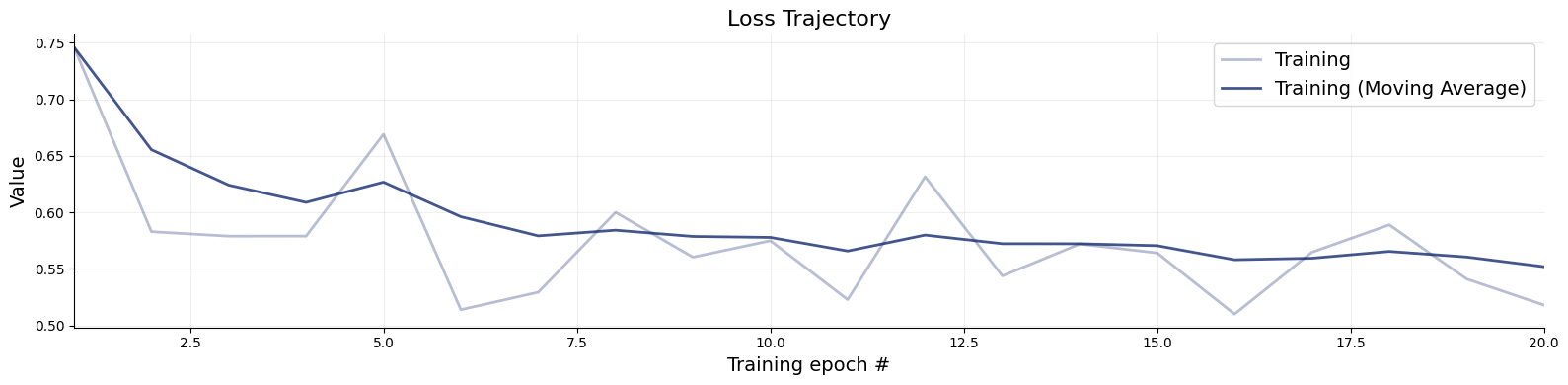

Training

Code

history = workflow.fit_online(epochs=20, batch_size=512)

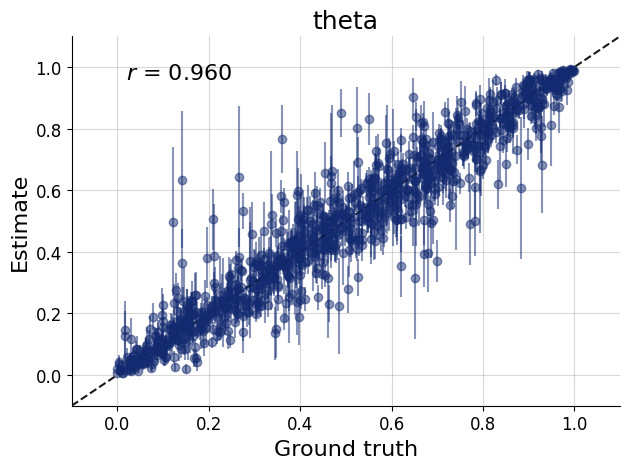

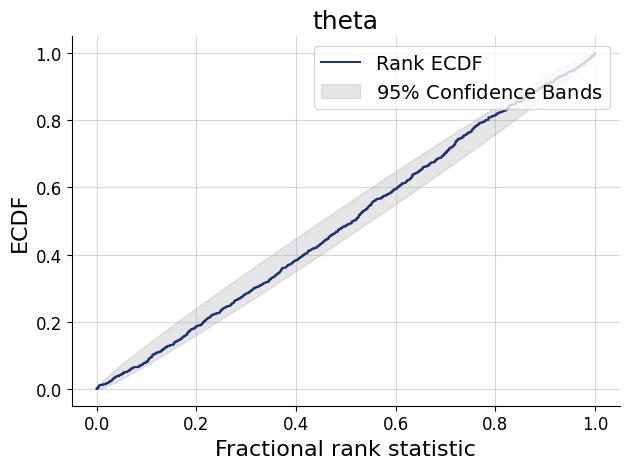

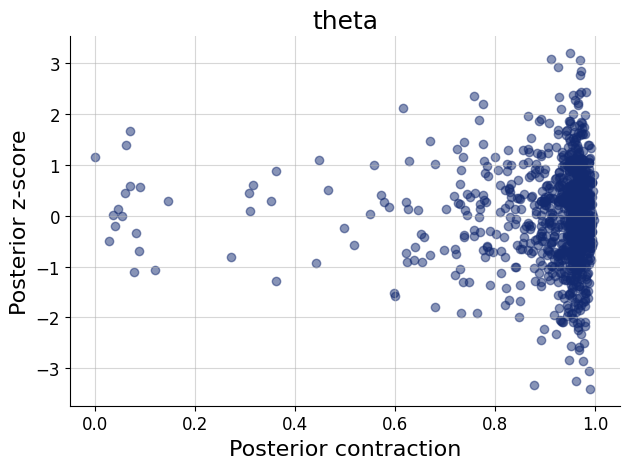

Validation

Code

test_data = simulator.sample(1000)
figs = workflow.plot_default_diagnostics(test_data=test_data, num_samples=500)

Inference

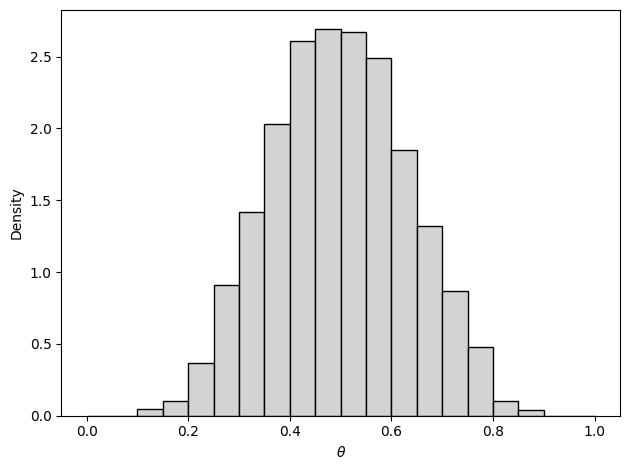

Now we approximate the posterior distribution of \(\theta\) given \(k=5\) and \(n=10\):

Code

inference_data = dict(
    k=np.array([[5]]),
    n=np.array([[10]])
)

Code

samples = workflow.sample(num_samples=2000, conditions=inference_data)

Code

| Statistic | Value |
|---|---|
| count | 2000.000000 |
| mean | 0.498038 |
| std | 0.134124 |
| min | 0.125993 |
| 25% | 0.401482 |
| 50% | 0.496852 |
| 75% | 0.591911 |
| max | 0.870781 |
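These summary statistics can be checked against the exact answer. With a \(\text{Beta}(1, 1)\) prior and a binomial likelihood, the posterior is \(\text{Beta}(k + 1, n - k + 1)\), so its mean and standard deviation are available in closed form. The following sketch computes them with the standard library only; the helper name `beta_posterior_summary` is hypothetical.

```python
import math

def beta_posterior_summary(k, n, a=1.0, b=1.0):
    """Exact Beta posterior parameters, mean, and sd for a binomial rate."""
    a_post = a + k          # prior pseudo-successes plus observed successes
    b_post = b + n - k      # prior pseudo-failures plus observed failures
    total = a_post + b_post
    mean = a_post / total
    var = a_post * b_post / (total ** 2 * (total + 1))
    return a_post, b_post, mean, math.sqrt(var)

a_post, b_post, mean, sd = beta_posterior_summary(k=5, n=10)
print(f"Beta({a_post:g}, {b_post:g}): mean={mean:.4f}, sd={sd:.4f}")
# prints "Beta(6, 6): mean=0.5000, sd=0.1387"
```

The amortized samples reported above (mean 0.498, standard deviation 0.134) are close to these exact values.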

Code

plt.hist(
    samples["theta"].flatten(),
    density=True,
    color="lightgray",
    edgecolor="black",
    bins=np.arange(0, 1.05, 0.05),
)
plt.xlabel(r"$\theta$")
plt.ylabel("Density")

References

Lee, M. D., & Wagenmakers, E.-J. (2013). Bayesian Cognitive Modeling: A Practical Course. Cambridge University Press.